Medicine is the science and art of preserving health and treating illness. Medicine is a science because it is based on knowledge gained through careful study and experimentation. It is an art because its success depends on how skillfully medical professionals apply their knowledge in dealing with patients.

The goals of medicine include saving lives, relieving suffering, and maintaining the dignity of sick people. These goals have made medicine one of the most respected professions. Thousands of men and women who work in medicine spend their lives caring for the sick. When disaster strikes, health workers rush emergency aid to the injured. When epidemics threaten, doctors and nurses work to prevent the spread of illness. Medical researchers search continually for better ways to prevent and treat disease.

Illness has existed since human beings and their ancestors first appeared on earth. Through most of human existence, people knew little about how the body works or what causes disease. Treatment was based largely on superstition and guesswork. People slowly gained their first accurate ideas about health and illness as the great ancient civilizations arose in the Middle East, India, China, Greece, and Rome.

In the last few centuries, medicine has progressed greatly. Medical workers have improved health care for adults and children and brought many infectious diseases under control. Thousands of new drugs and procedures have been developed. These advances, combined with better nutrition, sanitation, and living conditions, have given people a longer life expectancy. In the mid-1900’s, the worldwide average life expectancy at birth was less than 50 years. Today, it is about 72 years.

Medical care is often considered part of the larger field of health care. In addition to medical care, health care includes the services provided by dentists, clinical psychologists, social workers, physical and occupational therapists, and many other professionals. This article deals chiefly with the kind of health care provided by doctors and by people who work with doctors.

Elements of medical care

Medical care has three main elements: (1) prevention, (2) diagnosis, and (3) treatment.

Prevention

Prevention of disease is a broad social goal that requires the cooperation of governments, medical workers, and patients. Preventing illness avoids suffering and costs less than treating many ailments. Some wide-ranging preventive measures, such as purifying drinking water, are most effectively carried out by governments. Doctors provide many treatments that help patients avoid illness. For example, they vaccinate people against polio, measles, and other diseases. Another way that doctors prevent disease is by educating patients and explaining medical issues. Physicians also encourage patients to make healthy choices, such as eating a nutritious, low-fat diet and avoiding smoking. Most doctors recommend that patients have regular checkups. These examinations may prevent serious illness by enabling doctors to diagnose and treat disease in its earliest stages.

Diagnosis

Diagnosis is the process of identifying the condition or illness that causes a patient’s distress. In many cases, a variety of ailments could account for particular symptoms. For example, chest pain might be due to indigestion, muscle strain, or blockage of one of the heart’s main blood vessels. To make a diagnosis, doctors first gather information about a patient by taking a medical history and performing an examination. They may obtain more information with medical tests. Doctors then use their skill, experience, and judgment to arrive at the diagnosis that best accounts for all the facts.

Doctors obtain a medical history by questioning patients. They ask about the symptoms that brought the patient to the doctor, past illnesses, and the health of family members. They also ask about habits and practices that affect health, such as whether a patient smokes.

A physical examination provides more information about a patient’s condition. In a routine office examination, doctors can observe skin tone and evaluate the general condition of muscles and nerves. They can press and probe the body to check internal organs for unusual texture, size, or shape. Certain instruments can be used routinely to reveal conditions inside the body. For example, a stethoscope enables doctors to listen to a patient’s chest and hear abnormalities in heartbeat or breathing.

When the history and routine examination do not provide enough information to make a diagnosis, a doctor may order more advanced tests. For example, such technologies as magnetic resonance imaging (MRI) can provide extremely detailed views of internal organs. Laboratory tests also aid diagnosis with chemical analysis or microscopic examination of blood, other body fluids, and tissues. If a diagnosis is difficult, a doctor may seek opinions from other doctors.

Treatment

Correct diagnosis provides the basis for successful treatment. Sometimes no treatment is required, and a doctor simply reassures a patient that the body will heal itself. At other times, prompt and accurate treatment prevents suffering and deadly complications. For example, early appendicitis usually causes a somewhat vague symptom—stomach pain near the navel that may stop, then start again. But delay in diagnosing and removing a diseased appendix may lead to widespread infection or, in extreme cases, death.

The number of treatments available grows constantly as medical knowledge advances. Some of the dozens of common treatments are listed under the Treatment section in the Related articles list at the end of this article.

When doctors make an incorrect diagnosis or provide improper treatment, patients may sue them for malpractice. To win a malpractice suit, a patient generally must show that the doctor was negligent and that the negligence caused harm. Negligence is treatment that falls below the level of care that patients should reasonably expect from a skilled, competent doctor.

Medical professionals

In the United States, Canada, the United Kingdom, and other developed countries, medicine has become more complicated as it has progressed. In most cases, a doctor makes the major decisions regarding care of patients. Traditionally, all doctors received general medical training and treated all types of patients. Sick people received most care in their homes from one doctor who treated the whole family. Then, during the late 1800’s and early 1900’s, hospitals began to provide a better environment than homes for seriously ill patients. Family doctors began to admit patients to hospitals to take advantage of such features as skilled nursing care and operating rooms. But family doctors continued to oversee care of their hospitalized patients single-handedly.

Specialty care physicians

As medical knowledge progressed, physicians found it increasingly difficult to keep up with important advances in the whole field of medicine. Doctors began to spend their final years of training concentrating on a limited area of treatment. This narrow focus is called specialization. The area on which doctors focus is called their specialty. For example, cardiology is the specialty that deals with diseases of the heart and circulatory system. Specialization enables doctors to become experts in a limited area and keep up with advances in that area throughout their professional lives. The table Major medical specialties in this article lists some important fields of medical concentration.

Many patients now receive care from a doctor who is an expert in their illness or from teams of health professionals. For example, many cancer patients receive treatment from several doctors. Cancer specialists include medical oncologists who prescribe cancer drugs, radiation oncologists who shrink tumors with radiation, and surgical oncologists who have special skill in cancer surgery. Cancer care teams may also include such additional members as specially trained nurses, social workers, and rehabilitation counselors.

Primary care physicians

Specialties that focus on providing basic medical treatment are called primary care specialties. Like yesterday’s family doctors, primary care specialists treat a wide range of ailments. But today’s primary care doctors receive more extensive training than did yesterday’s family doctors. Part of today’s training teaches primary care doctors to recognize cases that require the additional skills of a specialist. In the United States, primary care specialties include internal medicine, in which doctors focus on the general medical needs of adults; pediatrics, in which doctors specialize in treating children; and family practice, in which doctors care for all members of a family. In Canada, family practice is the only primary care specialty. In the United Kingdom, primary care doctors are called general practitioners.

Other health care professionals

Many health care professionals in addition to doctors help provide medical care. Registered nurses work closely with doctors in clinics, hospitals, and offices. A growing number of nurses also work independently to provide many services formerly provided only by doctors. For example, nurse-practitioners are registered nurses with additional training and certification. Their additional qualifications enable them to provide many types of basic primary and specialty care. When doctors prescribe drugs, pharmacists fill the prescriptions and may give advice on the drugs prescribed. The section Other careers in medicine discusses various nonphysician medical workers.

Providing medical care

Providing health care for citizens is a major social goal in most countries. Governments concern themselves with medical care because healthy citizens are happier and more productive than sick people are. People who are ill may not be able to work, attend school, or perform other tasks important to national well-being. But as worldwide life expectancy increases, health care grows more expensive. More people become ill with ailments that tend to strike as people age, such as arthritis, cancer, and heart disease. Researchers constantly develop new drugs and procedures to treat these conditions. But many such treatments involve costly, long-term care.

Paying for medical care challenges societies in two important ways. First, countries must find a means to limit the total cost of health care to a reasonable portion of their national wealth. Societies must pay for health care and still have enough money for education, military defense, transportation, and other social needs.

Second, countries must protect individual citizens from severe financial hardship caused by the cost of serious illness. Providing such protection requires spreading the cost of medical care among large groups that include both sick and healthy people. Some nations pay for medical care with general taxes. General tax funding spreads medical costs among all of a nation’s taxpaying citizens. In other countries, citizens are protected with various types of public or private insurance. Insurance collects payments called premiums to spread costs among all the individuals in a particular insured group.

This section discusses the different ways that the United Kingdom, Canada, and the United States provide and pay for health care. A brief discussion of health care in other countries concludes the section.

The United Kingdom

The United Kingdom has a health care system called the National Health Service (NHS) that provides most medical care free of charge to people who live in the country. The NHS is funded mainly by general taxes. Employed people make an additional contribution through a system called National Insurance.

Nearly everyone in the United Kingdom registers with a primary care doctor called a general practitioner. People can register with any general practitioner (GP) of their choice. Most GP’s work in small groups and see patients in offices called surgeries. People consult their GP’s whenever they need medical care. When a GP determines that a patient requires specialized care or treatment in a hospital, the GP refers the patient to a specialist. Patients cannot consult NHS specialists without a referral from a GP except in an emergency. NHS specialists work mainly in hospitals. When a specialist has completed a patient’s treatment, the specialist sends the patient back to the GP.

Both GP’s and specialists are paid by the National Health Service. In addition, the NHS provides many community support services, including nurses, ambulances, and certain types of home care.

The NHS holds down the cost of health care by emphasizing primary care and by limiting the number of hospitals. The number of doctors is also controlled by budget constraints and other factors that limit the number of admissions to medical schools. Specialists must go through additional training, then compete for a hospital post in their specialty when a position opens. The NHS controls where GP’s can practice to ensure that medical care is available in all geographic areas.

Canada

Canada has a national medical care program that is commonly called medicare. (The United States also has a public health care program whose formal name is Medicare. The U.S. Medicare program is discussed in the United States section that follows.) Canadian medicare provides free doctor and hospital care to all citizens and permanent residents through the joint efforts of the federal and provincial governments. The federal government gives general tax dollars to the provinces to finance medical care and certain other programs. Each province decides how to obtain the rest of the money needed to pay for its medicare program. Some provinces collect additional taxes. Others create required insurance programs for people who can afford to pay something.

Each province negotiates with doctors to determine the fees that medicare will pay for different services and procedures. Patients may see any doctor whenever they feel that they need medical care. The province then pays the doctor the fee agreed upon for the care given.

In Canada, family practice physicians are the only primary care doctors. Internal medicine and pediatrics are grouped with other specialties. Both family practitioners and specialists see patients in offices and hospitals. In most cases, however, patients need a referral from a family practitioner to see a specialist.

Canada’s federal government and the provinces cooperate to coordinate training and placement of doctors. Medical schools in all the provinces have limits on the number of students they can accept each year. A national committee coordinates education after medical school to ensure that about half of Canada’s doctors are trained as family physicians. Canada’s public hospitals are funded and regulated by the provincial governments.

The provinces use various means to discourage doctors from flocking to large cities. City practice appeals to most doctors because cities have large, well-staffed hospitals and many other doctors to offer personal and professional support. But concentration of doctors in cities tends to leave rural areas without enough medical care.

Private clinics that do not receive government funding operate in some provinces. At these clinics, patients themselves must pay for medical services.

The United States

The United States has no comprehensive national system for providing medical care. United States citizens receive medical care under many different private and public arrangements. The U.S. government plays a smaller role in providing health care than do the governments of most other developed countries.

The two principal government-operated health care programs in the United States are Medicare and Medicaid. Medicare is a national program chiefly for people 65 years old or older. Its two original parts are Part A and Part B. Medicare Part A, which pays for hospital care, is funded mainly by a payroll tax. Medicare Part B, which pays for doctors’ services, charges participants a monthly fee and is also paid for with general tax revenue.

Medicaid is a program that pays doctor and hospital bills for many people with low incomes. Medicaid is funded with federal and state tax dollars and is administered by the states.

The most widespread U.S. health care plan is private health insurance. In some cases, individuals buy private insurance. But most people with private health insurance purchase it as members of a group. The most common groups are based in places of employment. Such groups include all the people employed by a particular company or all the workers in a government office.

Private health insurance became the dominant form of U.S. health coverage about the time of World War II (1939-1945). During the war, the U.S. government enacted wage and price controls that limited employers’ ability to give workers raises. To attract workers, employers began to offer indirect forms of pay called benefits. Paying for employee health insurance quickly became one of the most attractive benefits that employers could provide. Because of its popularity, the practice continued after the government lifted wage and price controls. Most employers still pay all or part of the cost of health insurance for their workers.

Public and private health care programs in the United States use two main methods of paying for health care: (1) fee-for-service plans and (2) managed care systems. In addition, U.S. citizens pay personally—also called out-of-pocket payment—for much of their medical care.

Fee-for-service plans

Fee-for-service plans pay doctors and hospitals for individual medical procedures. Fee-for-service payment was the most common system of paying for care from the end of World War II to the 1990’s. People with fee-for-service insurance freely consult any primary care doctor or specialist of their choice. The chosen doctors decide what care the patient needs, provide treatment, and submit bills for each medical service. Hospitals submit similar itemized bills. The health care plan then pays doctors and hospitals based on the amounts charged. Most fee-for-service plans do not pay for some services and pay only a portion of the cost of others.

Traditionally, Medicare and Medicaid have worked much like fee-for-service programs. Medicare and Medicaid patients are free to choose their own doctors. But both programs pay doctors according to fee schedules set by the government. To pay hospitals, Medicare classifies ailments into hundreds of categories called diagnosis-related groups (DRG’s). It then pays a fixed amount for each DRG, based on the average cost of treating that ailment.

Many Medicaid fees fall considerably below doctors’ usual charges. Because of these lower fees, some doctors and hospitals will not accept Medicaid patients.

Fee-for-service medicine offers patients great personal choice and doctors great independence. But choice and independence also make the system expensive. As in other countries, an aging population and advancing medical technology are increasing the cost of medical care. Because doctors and hospitals are paid for each service they provide, the fee-for-service system tends to encourage heavy use of medical services. The United States spends a higher percentage of its national wealth on medical care than does any other country. Efforts to reduce the percentage of U.S. wealth spent on medical care have centered on a system called managed care.

Managed care

Managed care tries to reduce costs by coordinating care and controlling patients’ use of doctors and services. One important type of managed care plan is a health maintenance organization (HMO). An HMO controls costs by requiring patients to choose approved doctors and hospitals. These doctors and hospitals have agreed to see HMO patients at reduced rates. In return, the doctors and hospitals receive a steady flow of patients sent by the HMO.

In addition, patients in many managed care programs must first see a primary care doctor for a referral to a specialist. Primary care doctors who control referrals to specialists are commonly called gatekeepers. HMO’s also require both primary care doctors and specialists to seek approval for many treatments, and some treatments are not approved. The organizations usually set limits on the drugs doctors can prescribe and impose other restrictions. Many HMO plans cost less than fee-for-service plans and cover more types of care.

Another common managed care plan is called a preferred provider organization (PPO). A PPO is a loose network of doctors and hospitals that have agreed to care for PPO members at reduced rates. The doctors and hospitals that participate in the network are called preferred providers. Patients in a PPO can choose their doctors. But they must choose from among the preferred providers or pay more for their care.

Many employers prefer to offer employees managed care insurance because it costs less than fee-for-service insurance. Both Medicare and Medicaid now enroll many of their members in managed care plans to help control costs. Some managed care patients also appreciate the lower costs and wider range of covered services. But other patients object to the limited choice imposed by such plans. In addition, many doctors are concerned about HMO review of their decisions and restrictions on treatment.

United States citizens pay directly—in other words, pay out-of-pocket—for much of the health care that they receive. Many insurance programs do not cover certain services, and many require patients to pay a portion of covered charges. A patient’s portion of charges is called a co-payment. In addition, the United States is the only developed country in which large numbers of people have no medical coverage of any kind. Part-time workers form one large group of uninsured citizens. Many employers do not offer health insurance to part-time employees because of its cost. Many of these workers earn too much to qualify for Medicaid. But they do not make enough to be able to afford a costly individual insurance policy. How to solve the problem of lack of insurance is a topic of active debate in the United States.

Other countries

Most industrialized countries have some type of comprehensive system that provides medical care for most citizens. Some nations pay for these systems with taxes. Others fund health insurance by requiring citizens to participate in national health insurance programs.

Developing countries face a particularly severe challenge in providing medical care. These nations struggle with serious shortages of money, medical facilities, and doctors and other health care workers. Neither the governments nor the citizens have the resources to pay for adequate levels of care. As in industrialized countries, life expectancy has increased. As people age, many fall ill with the age-related illnesses common elsewhere in the world—cancer, heart disease, and arthritis. In addition, many people, especially in Africa and Latin America, suffer from infections and parasites that are well controlled in richer countries.

Improving the quality of medical care

Two of the chief groups that work to improve the quality of care are (1) medical researchers and (2) medical organizations. In addition, computers and other technology enable medical professionals to learn about the latest approaches to medical care.

Medical researchers

Medical researchers experiment and observe to gain new knowledge about health and disease. Discoveries made through research make important contributions to medical progress. Governments, major universities, private foundations, and drug companies provide funds to support research. Many medical researchers are physicians. Others have advanced degrees in biology, chemistry, or other sciences. The three main types of medical research are (1) basic laboratory research, (2) translational research, and (3) clinical research.

Basic laboratory research

Basic laboratory research investigates fundamental biological questions. One extremely active area of basic research explores genes (the hereditary material in cells). Genes help control the life processes in living things, including how cells grow, divide, and communicate with other cells. Scientists worldwide are working to understand how genes function.

Knowledge gained through basic laboratory research may not apply immediately to medical practice. But basic researchers hope that their discoveries will lead eventually to improvements in treating or preventing disease.

Translational research

Translational research aims to translate (transfer) discoveries from the laboratory to patient care. It tries to find medical uses for knowledge gained through basic research. For example, basic researchers studying breast tissue might discover that many breast cancers have a particular abnormal gene. Translational research would then focus on finding ways that this discovery could help patients. Researchers might try to develop a blood test that would show whether people have the abnormal gene. Identifying the abnormal gene in people before they developed cancer might lead to new ways to prevent the disease or treat it in its earliest stages.

Clinical research

Clinical research tests the safety and effectiveness of treatments in people. Investigators conduct clinical research after preliminary research strongly indicates that a treatment may help patients. For example, basic research might identify a chemical that prevented cell division in tissue samples. Translational research would then try to develop a form of the chemical that could be used as a cancer drug. Cancer cells divide rapidly, and many cancer drugs work by interfering with this rapid multiplication. If a promising drug were developed, clinical researchers would conduct tests to ensure that the drug was safe. After its safety was established, more clinical research would determine if the new drug could actually help shrink tumors in cancer patients.

Medical organizations

Many national and international organizations work to improve the quality of medical care. National medical associations include the American Medical Association, the British Medical Association, and the Canadian Medical Association. Most national associations focus their efforts on education and professional development. They also represent medical interests before governments and other policymaking bodies. Many national associations participate in the World Medical Association. This international federation is dedicated to medical education, science, ethics, and health care for people worldwide.

One of the most prominent international health organizations is the World Health Organization (WHO), the health agency of the United Nations. WHO’s constitution declares that enjoying the highest attainable standard of health is a fundamental right of every human being. The agency operates many programs dedicated to obtaining the best possible health care for everyone, especially people in developing countries.

Medical organizations at all levels work to encourage professional development and the exchange of medical information. They publish journals that describe the latest research. They sponsor professional conferences where participants meet and share information. Organizations for individual medical specialties play a particularly strong role in providing information and professional support for their members. For example, the American College of Cardiology is a professional society for physicians and scientists who specialize in diseases of the heart and circulatory system.

Computers and electronic communication

Computers and electronic communication offer important ways to share information and advance medical knowledge. Like other scientists, doctors benefit greatly from the internet. The internet eliminates geographic barriers and makes information available in remote locations throughout the world. Many medical publications are available online. For example, computer users can search articles from thousands of medical journals through databases maintained by the U.S. National Library of Medicine, and they can read many of these articles online.

Doctors and other medical workers also use the internet to communicate with one another. Electronic communication provides the means to instantly share X rays, lab results, and other aids in diagnosis. Some doctors even use email or smartphone applications to communicate with patients.

In many places, widespread internet access has made telemedicine common. In a telemedicine appointment, a doctor provides medical care remotely by communicating with a patient on a voice or video call. Telemedicine is especially advantageous when it would be impractical or risky for a patient to visit a doctor’s office in person. For example, people who live far from a doctor’s office can receive some medical care without having to travel. Patients with contagious diseases, such as COVID-19, can see their doctor without exposing others to possible infection.

Careers in medicine

The profession of physician is perhaps the best-known medical career. Many other kinds of workers, however, help provide medical care.

A career as a doctor

Becoming a physician can provide a personally satisfying, highly respected career. In many developed countries, medicine is a well-paid profession. But becoming a doctor requires many years of challenging study in classrooms, in laboratories, and with patients. This section discusses physician training in the United States, Canada, and the United Kingdom.

In the United States, Canada, and the United Kingdom, doctors receive their professional education in medical school. In the United States and Canada, most medical schools grant the doctor of medicine (M.D.) degree. In the United Kingdom, medical schools usually grant a combined bachelor’s degree in medicine and surgery, such as the M.B.B.S. or M.B.Ch.B. In the United States, some schools grant the doctor of osteopathic medicine (D.O.) degree. Osteopathy is a system of medical care founded in the late 1800’s to emphasize the importance of the body’s bones, muscles, ligaments, and tendons. Osteopathic medicine now includes all the treatments of modern medicine. In the United States, D.O.’s are considered to have the same professional skills and responsibilities as M.D.’s.

In Canada and the United States, most students prepare for medical school by earning a college degree. The college work that comes before medical school is called premedical training or simply premed. Premedical training includes biology, chemistry, physics, and mathematics to equip students to understand the scientific and technical aspects of medicine. Most premed students also study languages, literature, history, and other subjects that help them understand human experience and appreciate patients as people. Doctors must be able to communicate clearly and kindly about complicated medical topics and matters of life and death.

Medical school in the United States and Canada usually lasts four years. In the United Kingdom, most students begin medical school directly after secondary school, and the course usually lasts five or six years. In all three countries, students spend their first years in medical school studying sciences that form the foundation of medicine. These sciences include biochemistry (the chemistry of living things), anatomy (study of the body’s structure), and physiology (the science of how cells, organs, and the body function). In later years of medical school, students receive their first clinical training. In clinical training, students learn by caring for patients under the supervision of experienced doctors.

After medical school, new doctors enter advanced clinical programs to increase their skill and experience in treating patients. In the United Kingdom, new graduates first complete a two-year foundation program that builds basic clinical skills before they enter specialty training. Depending on the medical field they want to enter, doctors may spend up to six or seven years in higher clinical training.

Doctors gain certification to practice medicine by passing national examinations that demonstrate their basic medical knowledge and skill in specialty areas. All the states of the United States and provinces of Canada grant licenses to practice medicine. In the United Kingdom, an agency called the General Medical Council admits qualified doctors to a national registry.

Other careers in medicine

Many people, in addition to physicians, play key roles in providing medical care. In many cases, successful care of patients depends on the efforts of a wide range of medical workers. See the articles on Nursing and Pharmacy for detailed information about these important medical occupations.

Physician assistants,

also called PA’s, are trained to provide basic medical care under the supervision of doctors. They take medical histories, perform routine examinations, order and interpret tests, and make a preliminary diagnosis. Many PA’s work in primary care. Others assist in such specialties as surgery and emergency medicine. Physician assistants are not registered nurses. Most receive their training in a program that leads to a college degree. Others earn a degree in another field, then enter a physician assistant program after college.

Physical therapists and other specialty therapists.

Several kinds of therapists provide specialized care or activities that form an important part of many treatment plans. Physical therapists use such physical means as light, heat, and exercise to improve patients’ ability to move or function normally. Occupational therapists help people with illnesses or disabilities develop, recover, or maintain skills necessary for work or daily living. Recreational therapists guide patients in games, dance, arts and crafts, or other enjoyable activities to maintain physical and emotional well-being. Respiratory therapists provide evaluations and treatments for patients with breathing disorders.

Medical social workers

help patients and their families cope with the social and emotional aspects of illness. They provide counseling and support and help patients arrange for care after leaving the hospital.

Technicians and technologists.

A wide variety of medical occupations require high levels of particular technical skills. For example, medical technologists perform difficult and highly specialized laboratory tests. They also supervise laboratory technicians, who perform more routine laboratory tasks. Radiologic technologists prepare patients for X rays and other imaging procedures, such as ultrasound, computed tomography (CT), and magnetic resonance imaging (MRI). Emergency medical technicians (EMT’s), also called EMT-paramedics, drive specially equipped vehicles to the scenes of emergencies. They administer urgent care, then transport patients to medical facilities.

History

In prehistoric times, people believed that angry gods or evil spirits caused disease. Curing the sick required calming the gods or driving the evil spirits from the body. The priests who tried to soothe the gods or drive out the evil spirits became the first professional healers of the sick.

The first known surgical treatment was an operation called trephining << trih FYN ihng >>. Trephining involved using a stone instrument to cut a hole in a patient’s skull. Scientists have found fossils of trephined skulls as much as 10,000 years old. Early people may have performed the operation to release spirits believed responsible for headaches, mental illness, or epilepsy. Trephining may actually have brought relief in some cases by releasing pressure in the head. Surgeons still use a similar procedure to relieve some types of pressure on the brain.

Prehistoric people probably also discovered that many plants can be used as drugs. For example, the use of willow bark to relieve pain likely dates back thousands of years. Today, scientists know that willow bark contains salicin, a substance related to the active ingredient in aspirin.

The ancient Middle East.

By about 3000 B.C., the Egyptians, who had developed one of the world’s first great civilizations, began making important medical progress. The world’s first physician known by name was the Egyptian Imhotep, who lived about 2650 B.C. The Egyptians later worshiped him as the god of healing. About 2500 B.C., Egyptian surgeons produced a textbook that told how to treat dislocated or fractured bones and external abscesses, tumors, and wounds.

Other ancient Middle Eastern civilizations also contributed to medical progress. The ancient Israelites, for example, made advances in preventive medicine from about 1200 to 600 B.C. The Israelites required strict isolation of individuals with gonorrhea, leprosy, and other contagious diseases. They also prohibited the contamination of public wells and the eating of pork and other foods that might carry disease.

China and India.

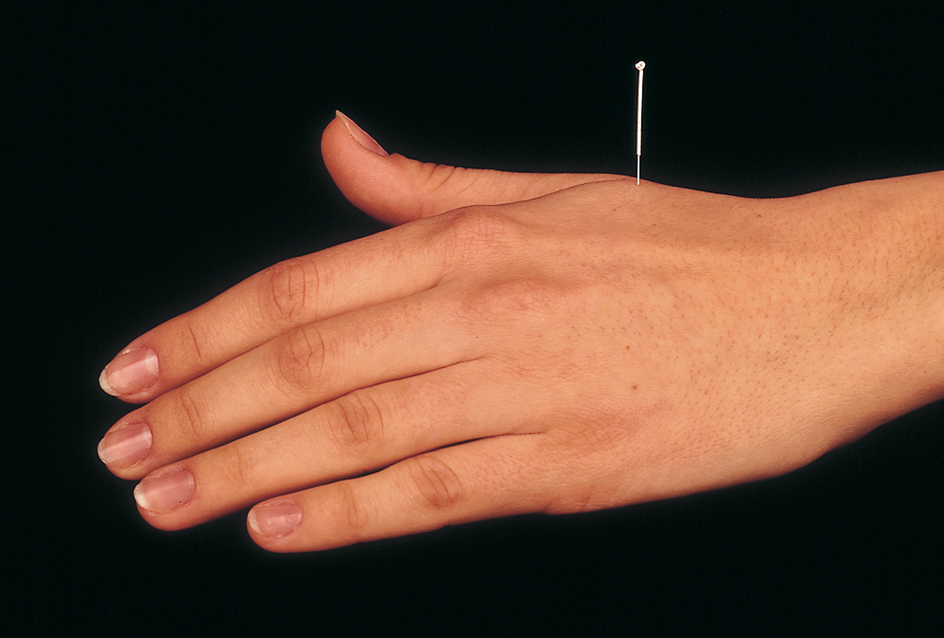

The ancient Chinese developed many medical practices that continue almost unchanged to the present day. Much of this traditional medicine is based on the belief that two principal forces of nature, called yin and yang, flow through the human body. Disease results when the two forces become out of balance. One technique that the Chinese developed to restore this balance was acupuncture. Practitioners of acupuncture insert needles into parts of the body thought to control the flow of yin and yang. Chinese doctors still practice acupuncture. The practice has gained popularity in Western countries, where it is used to treat nausea and some types of pain.

Traditional herbal cures and dietary practices in China also arouse interest today for their possible value in preventing disease. For example, breast cancer and colon cancer are rare in China and many other Asian countries. Many doctors think that one reason for the rarity of these cancers is that the typical Asian diet contains little fat.

In ancient India, the practice of medicine became known as ayurveda. It stressed prevention as well as the treatment of illness. By 600 to 500 B.C., practitioners of ayurveda had developed impressive knowledge of drugs to treat illness and of surgery. Indian surgeons successfully performed many kinds of operations, including amputations and a form of plastic surgery to reconstruct injured noses and ears.

Greece and Rome.

The civilization of ancient Greece reached its height during the 400’s B.C. During this peak, sick people seeking cures flocked to temples dedicated to Asclepius, the Greek god of healing. At the same time, the great Greek physician Hippocrates began showing that disease has natural rather than divine causes. He thus became the first known physician to consider medicine a science and art separate from the practice of religion. Hippocrates’s high ideals are reflected in the Hippocratic oath, an expression of early medical ethics. But the oath was probably composed from a number of sources rather than written by Hippocrates himself. For the text of the oath, see the article Hippocrates.

After 300 B.C., the city of Rome gradually conquered Egypt, Greece, and much of the rest of the civilized world. The Romans borrowed most of their medical knowledge from Egypt and Greece. The Romans’ own medical achievements occurred largely in public health. For example, they built large water channels called aqueducts to carry fresh water to Rome. They also built an excellent sewer system.

The Greek physician Galen, who practiced medicine in Rome during the A.D. 100’s, made the most important contributions to medicine in Roman times. Galen performed experiments on animals and used his findings to develop the first medical theories based on scientific observations. He is considered the founder of experimental medicine. Galen wrote numerous books about medicine, anatomy, and physiology. As time passed, many of Galen’s theories were proved false. But some of his ideas continued to influence doctors into the 1500’s.

During the Middle Ages,

which lasted from about the A.D. 400’s through the 1400’s, the Islamic Empire of southwest and central Asia contributed greatly to medicine. Al-Rāzī, a Persian-born physician of the late 800’s and early 900’s, wrote the first accurate descriptions of measles and smallpox. Avicenna, a Muslim physician of the late 900’s and early 1000’s, produced a vast medical encyclopedia called Canon of Medicine. It summed up medical knowledge of the time and accurately described meningitis, tetanus, and many other diseases. The work became popular in Europe, where it influenced medical education for more than 600 years.

A series of epidemics swept across Europe during the Middle Ages. Outbreaks of leprosy began in the 500’s and reached their peak in the 1200’s. In the 1300’s, a terrible outbreak of plague, now known as the Black Death, killed from one-fourth to one-half of Europe’s people. Throughout the medieval period, smallpox and other diseases struck hundreds of thousands of people.

The chief medical advances in Europe during the Middle Ages were the founding of many hospitals and the first university medical schools. Christian religious groups established hundreds of charitable hospitals for people with leprosy. In the 900’s, a medical school was established in Salerno, Italy. It became the main center of medical learning in Europe during the 1000’s and 1100’s. During the 1100’s and 1200’s, other medical schools became part of newly developing universities, such as the University of Bologna in Italy and the University of Paris in France.

The Renaissance.

A new scientific spirit developed during the Renaissance, the great cultural movement that swept across Western Europe from about 1300 to the 1600’s. Before the Renaissance, people thought the study of religion was the most important branch of learning. During the Renaissance, the study of medicine and other sciences, art, literature, and history gained new significance. Before this time, most societies had strictly limited the practice of dissecting (cutting up) human corpses for scientific study. But laws against dissection were relaxed during the Renaissance. As a result, the first truly scientific studies of the human body began.

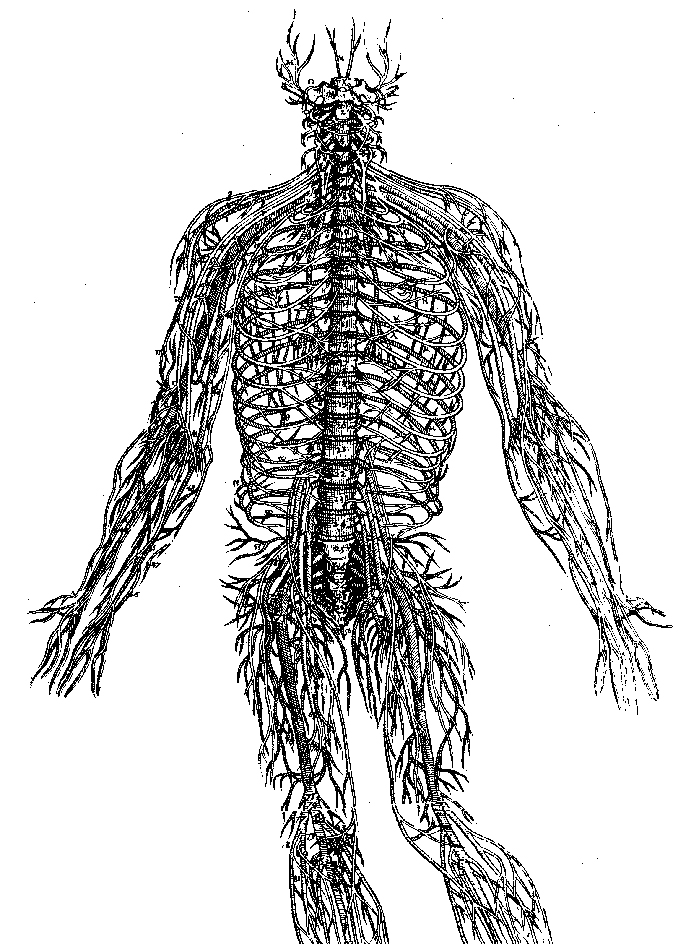

During the late 1400’s and early 1500’s, Italian artist Leonardo da Vinci performed many dissections to learn about human anatomy. He recorded his observations in a series of more than 750 drawings. Andreas Vesalius, a physician and professor of medicine at the University of Padua in Italy, also performed numerous dissections. Vesalius used his findings to write the first scientific textbook on human anatomy, a work called On the Structure of the Human Body (1543). This book gradually replaced the texts of Galen and Avicenna.

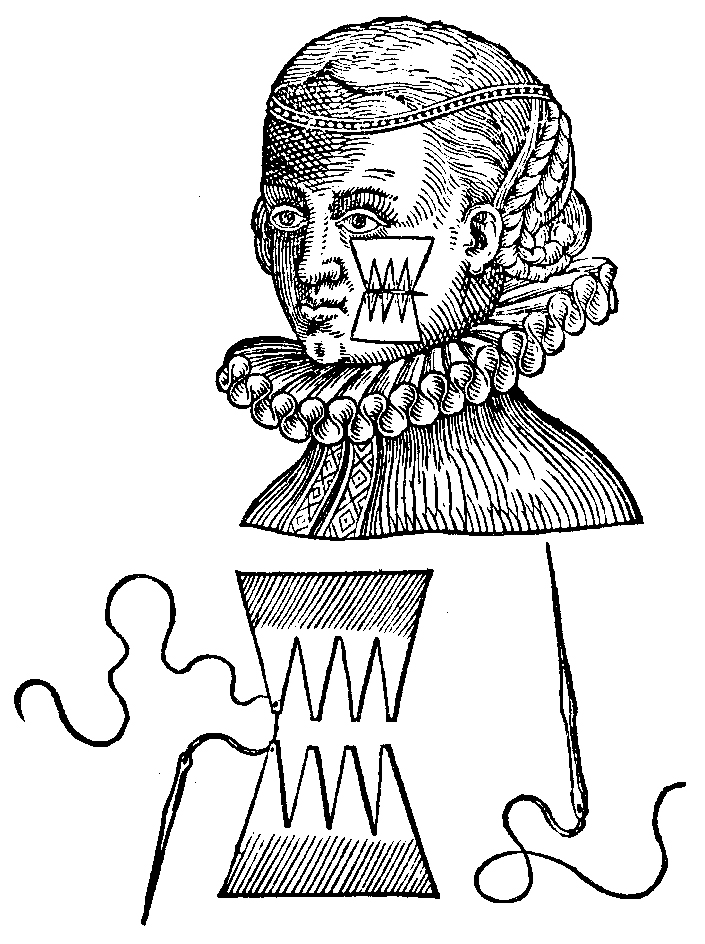

Other physicians also made outstanding contributions to medical science in the 1500’s. A French army doctor named Ambroise Paré is considered the father of modern surgery for his improvements in surgical technique. For example, Paré opposed the common practice of cauterizing (burning) wounds with boiling oil to prevent infection. Instead, he developed the much more effective method of applying a mild ointment and then allowing the wound to heal naturally. Philippus Paracelsus, a Swiss physician, stressed the importance of chemistry in the preparation of drugs. He pointed out that in many drugs, one ingredient canceled the effect of another.

The beginnings of modern research.

English physician William Harvey performed many experiments in the early 1600’s to learn how blood circulates through the body. Before Harvey, Galen and other scientists had studied circulation. But they had observed only parts of the process and invented theories to fill the gaps in their observations. Harvey studied the entire process. He observed the human pulse and heartbeat and performed dissections on human beings and other animals. Harvey concluded that the heart pumps blood through the arteries to all parts of the body. He further realized that the blood returns to the heart through the veins.

Harvey described his findings in An Anatomical Study of the Motion of the Heart and of the Blood in Animals (1628). His discovery of how blood circulates marked a turning point in medical history. After Harvey, scientists realized that understanding how the body works depends on knowledge of the body’s structure.

In the 1670’s, an amateur Dutch scientist named Anton van Leeuwenhoek << LAY vuhn hook >> saw tiny objects moving under his microscopes. He concluded correctly that these tiny objects were living things too small to be seen with the naked eye. He called them animalcules (little animals). Today, such organisms are called microorganisms. They are also known as microbes or germs. Leeuwenhoek did not understand the role of microbes in nature. But his research led to later discoveries that certain microbes cause disease.

The development of immunology.

Immunology is the study of the body’s ability to resist disease. Developing ways to increase the body’s natural resistance has become one of the most important areas of modern medicine. A milestone in immunology occurred in the prevention of smallpox, one of the most feared and highly contagious diseases of the 1700’s. Smallpox killed many people and scarred others for life.

Doctors had known for hundreds of years that a person who recovered from smallpox developed lifelong immunity (resistance) to it. To provide this immunity, doctors sometimes inoculated people with matter from a smallpox sore. The doctors hoped that the inoculation would cause only a mild case. But some people developed a severe case of smallpox instead of a mild one. Other inoculated persons spread the disease.

In 1796, English physician Edward Jenner discovered a safe method of making people immune to smallpox. He inoculated a young boy with matter from a cowpox sore. The boy developed cowpox, a relatively harmless disease related to smallpox. But when Jenner later injected the boy with matter from a smallpox sore, the boy did not come down with that disease. His bout with cowpox had helped his body build up an immunity to smallpox. Jenner’s classic experiment was the first officially recorded vaccination.

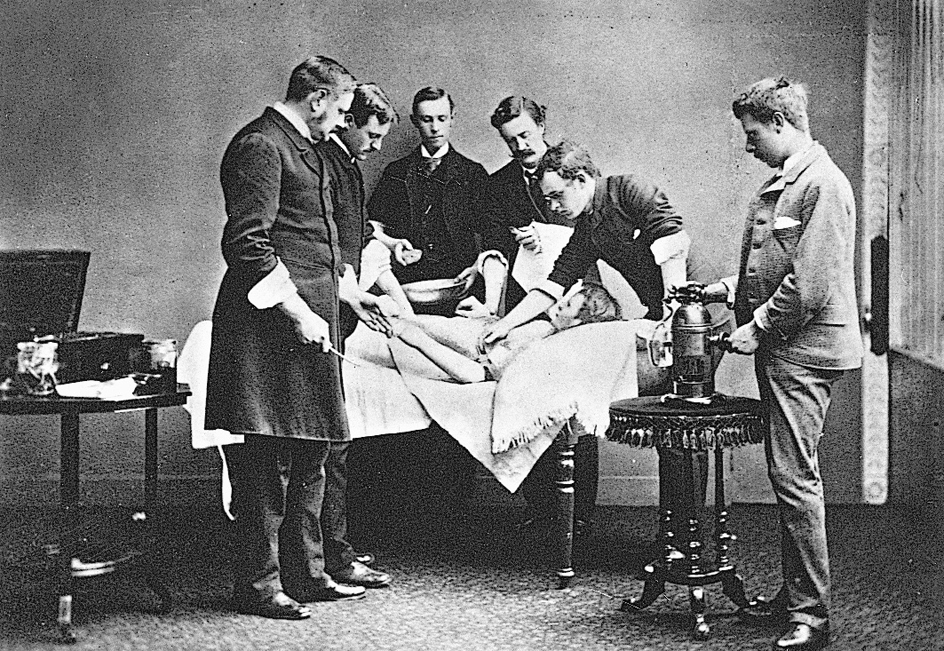

The first anesthetic.

For thousands of years, physicians tried to dull pain during surgery by administering alcoholic drinks, opium, and various other drugs. But no drug had proved really effective in reducing the pain and shock of operations. Then in the 1840’s, two Americans—Crawford Long and William T. G. Morton—discovered that ether gas could safely put patients to sleep during surgery. Long, a physician, and Morton, a dentist, appear to have made the discovery independently. With an anesthetic, doctors could perform many operations that they could not attempt on conscious patients.

Scientific study of the effects of disease,

called pathology, developed during the 1800’s. Rudolf Virchow, a German physician and scientist, led the field. Virchow believed that understanding a disease required understanding its effects on body cells. Virchow did important research in leukemia, tuberculosis, and other illnesses. Development of greatly improved microscopes in the early 1800’s made his studies possible.

Scientists of the 1800’s made dramatic progress in learning the causes of infectious disease. As early as the 1500’s, scholars had suggested that tiny invisible “seeds” caused some diseases. The microbes discovered by Leeuwenhoek in the 1600’s fit this description. In the late 1800’s, the research of Louis Pasteur and Robert Koch firmly established the idea called the microbial theory of disease. This idea is also called the germ theory.

Pasteur, a French chemist, proved that microbes are living organisms and that certain microbes cause disease. He also proved that killing specific microbes stops the spread of specific diseases. Koch, a German physician, invented a method for determining which bacteria cause particular diseases. This method enabled him to identify the bacterium that causes anthrax, a severe disease of people and animals. Anthrax bacteria became the first germs definitely linked to a particular disease. Koch also discovered the cause of tuberculosis.

Other research scientists followed the lead of these two pioneers. By the end of the 1800’s, researchers had discovered the kinds of bacteria and other microbes responsible for many infectious diseases. These illnesses included plague, cholera, diphtheria, dysentery, gonorrhea, leprosy, malaria, pneumonia, and tetanus.

Antiseptic surgery.

Hospitals paid little attention to cleanliness before the mid-1800’s. Operating rooms were often dirty, and surgeons operated in street clothes. Up to half of all surgical patients died of infections. In 1847, a Hungarian doctor, Ignaz Semmelweis, stressed the need for cleanliness in childbirth. But Semmelweis knew little about the germ theory of disease.

Pasteur’s early work on bacteria convinced an English surgeon named Joseph Lister that germs caused many deaths of surgical patients. In 1865, Lister began using carbolic acid, a powerful disinfectant, to sterilize surgical wounds. But this method was later replaced by a more efficient technique known as aseptic surgery. This technique involved keeping germs away from surgical wounds in the first place instead of trying to kill germs already there. Surgeons began to wash thoroughly before an operation and to wear sterilized surgical gowns, gloves, and masks.

Professional organizations and medical reform.

During the 1800’s and early 1900’s, doctors founded groups in the United States, Canada, and the United Kingdom to organize and reform the medical profession. In 1847, U.S. doctors founded the American Medical Association to help raise the nation’s medical standards. Partly as a result of the AMA’s efforts, the first state licensing boards were set up in the late 1800’s. The British Medical Association was founded in 1855, and the Canadian Medical Association in 1867. The National Medical Association was started in 1895 by African American doctors who felt discriminated against by the AMA. Osteopathic physicians founded the American Osteopathic Association in 1897.

In 1910, the Carnegie Foundation for the Advancement of Teaching issued a report called Medical Education in the United States and Canada. American educator Abraham Flexner wrote the report, which he based on detailed evaluations of all 155 medical schools in the two countries. The study, known informally as the Flexner Report, found that many schools failed to prepare students for the rapidly developing age of scientific medicine.

Flexner recommended improving the quality of medical schools by requiring entering students to have college-level preparation in basic sciences and humanities. He further urged that medical schools provide advanced scientific study, well-equipped laboratories, and broad clinical experience with patients. Finally, he recommended that medical schools establish strong relationships with universities and teaching hospitals. Flexner’s report helped bring far-reaching reforms to medical schools in the United States and Canada.

The medical revolution.

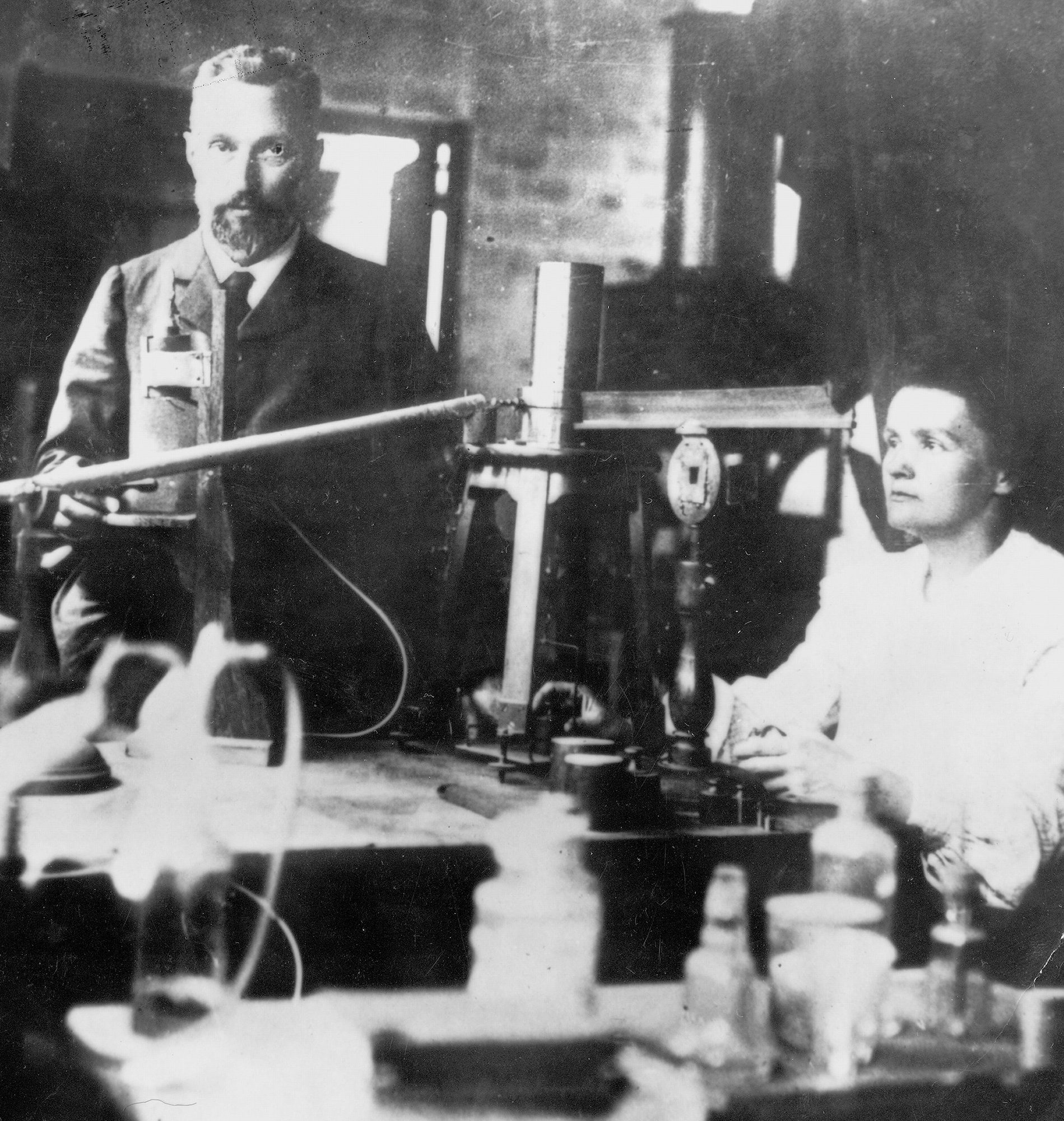

Advances in many fields of science and engineering created a medical revolution that began around 1900. German physicist Wilhelm Roentgen discovered X rays in 1895. X rays became the first of many technologies that enable doctors to “see” inside the human body to diagnose illnesses and injuries. Discovery of radium in 1898 by the French physicists Pierre and Marie Curie and the French chemist Gustave Bémont eventually provided a powerful weapon against cancer.

In the early 1900’s, Christiaan Eijkman of the Netherlands, Sir Frederick Hopkins of England, and a number of other physician-scientists showed the importance of vitamins. Their achievements helped conquer such nutritional diseases as beriberi, rickets, and scurvy. About 1910, the German physician and chemist Paul Ehrlich introduced a method of treatment called chemotherapy. Chemotherapy uses chemicals that are more toxic to disease-causing microbes or diseased cells than to healthy cells.

Ehrlich’s work greatly advanced drug research. In 1935, German doctor Gerhard Domagk discovered that a type of dye could cure infections in animals. His discovery led to development of bacteria-fighting compounds called sulfa drugs to treat diseases in human beings. In 1928, English bacteriologist Sir Alexander Fleming discovered the germ-killing power of a mold called Penicillium. In the early 1940’s, a group of scientists in England headed by the Australian-born pathologist Howard Florey isolated penicillin, a product of this mold. Penicillin became the first antibiotic to be widely used in medicine.

Since Domagk and Fleming made their historic discoveries, scientists have developed hundreds of antibiotics. These drugs have helped control the bacteria that cause many serious infectious diseases. Other drugs have been developed to treat such disorders as diabetes, high blood pressure, arthritis, heart disease, and cancer.

The development of new vaccines has helped control the spread of polio, hepatitis, measles, and other infectious diseases. During the 1960’s and 1970’s, the World Health Organization conducted a vaccination program that eliminated smallpox from the world.

During the 1980’s and 1990’s, scientists began to make significant progress in understanding genes (the hereditary material in cells). Researchers successfully identified the genetic defects involved in some diseases. The first genetic tests were developed to help doctors determine whether individual patients had inherited a damaged gene, which would be present in all the cells of the body. Doctors are hopeful that understanding genes will lead to new ways to treat or prevent disease.

Much progress in modern medicine has resulted from engineering advances. Engineers have developed a variety of instruments and devices to aid doctors in the diagnosis, treatment, and prevention of diseases (see Biomedical engineering). In many cases, doctors can now operate through tiny incisions using small precision instruments, a technique called minimally invasive surgery. This technique reduces the trauma of surgery and shortens or avoids hospitalization. See Surgery (Technique). Engineers have also made great progress in creating advanced replacements for worn or damaged body parts, including artificial limbs, joints, and heart valves. Imaging techniques, such as MRI, CT, and positron emission tomography (PET), produce highly detailed views of internal body structures.

The Nobel Prizes, first awarded in 1901, recognize important medical achievements with a prize for physiology or medicine. The table in the Nobel Prize article lists the scientists who have received the physiology or medicine prize and describes each achievement that earned the honor.

Current issues in medicine

Major issues in medicine today include the distribution of medical care, the financing of advanced medical care, and the growing role of genetics in medicine.

Unequal distribution of medical care.

The level of medical care varies tremendously throughout the world. In the richest countries, many people receive the most advanced treatment available. In poorer nations, citizens may lack even basic care. Many people are troubled that medical care can differ according to where people live and how much money they have. These people agree with the World Health Organization that medical care is a basic human right. But no one has found a way to pay for medical care for all of the world’s citizens.

Treatment of AIDS serves as a good example of how sharply medical care can vary from one country to another. In the United States and other developed countries, preventing and treating AIDS has become a major medical focus. Educational programs alert people about the danger of AIDS. These programs explain how to avoid infection by using condoms (a type of birth control) and taking other steps. Scientists have developed drugs that dramatically lower the amount of HIV, the virus that causes AIDS, in the body. Other drugs greatly decrease the risk that mothers will transmit HIV to their newborns during birth. Governments, private foundations, universities, and drug companies spend huge amounts of money to find even better methods of prevention and treatment.

In the poor countries of Africa, however, AIDS remains an unchecked epidemic. There is little money to educate people about avoiding AIDS, and many people cannot afford condoms. Poor African countries have difficulty paying for AIDS drugs, which can cost thousands of dollars per year for each patient. Little money is available for research.

Availability of doctors and medical facilities is another area in which rich and poor countries differ greatly. In many rich countries, experts believe that there is an oversupply of doctors and that too many of them are specialists. By contrast, many poor countries have a serious shortage of doctors of any kind.

Financing advanced medical care.

In developed countries, doctors, citizens, insurers, and policymakers struggle to continue funding high levels of health care. Canada, the United Kingdom, and other countries with national health care try to maintain the quality of their systems and keep them fair. Populations are aging, and more people are developing age-related ailments that are expensive to treat. At the same time, researchers are gaining new insights into genes and other basic biological processes. These insights may lead to treatments that are more effective but cost even more than current care. Experts are debating how national health systems can continue to pay for advanced health care for everyone.

The United States faces particularly difficult health care choices. Because there is no national U.S. health system, different groups of citizens already receive different levels of care. American policy experts are debating ways to eliminate health care gaps among citizens or keep the gaps from widening.

Private insurance is the most common U.S. health care plan and thus controls huge amounts of health care money. Because of its importance, private insurance heavily influences U.S. health care policy. Many insurance companies and managed care plans operate as businesses. Their chief goal is to make money for their owners and investors, who may not be doctors or medical workers. Some people wonder if such business principles as competition and profit making now play too large a role in U.S. medicine.

Education of doctors is one area in which business principles have a growing influence. Because many insurance plans control costs by emphasizing primary care, there is reduced need for medical specialists. Many specialty training programs have been cut back, merged with similar programs, or eliminated.

Increased focus on saving money also affects how hospitals pay for educating doctors. Hospitals in which doctors receive their higher clinical training are called teaching hospitals. These hospitals traditionally made up for the cost of training doctors by charging more for care than did hospitals without training responsibilities. Insurance plans paid the higher fees because such hospitals provided the most advanced care available. But many managed care systems are unwilling to pay teaching hospitals’ higher fees. Many experts worry about the effect that this refusal will have on doctor training.

Another factor affecting the cost of U.S. health care is malpractice. American patients are among the most likely in the world to sue their doctors or hospitals. Most people agree that patients need protection against negligent health care. But most experts feel that the high likelihood of malpractice suits drives up the cost of medical care. Doctors and hospitals must buy expensive malpractice insurance, and the cost is passed along to patients as higher fees. Many doctors feel they need to practice defensive medicine—that is, medicine determined by the threat of a malpractice suit rather than by medical judgment. Experts are debating ways to reduce the effect of malpractice on medical costs.

Managed care further complicates malpractice. Managed care systems conduct reviews of doctors’ treatment plans, and the system may refuse to approve treatment. In many cases, however, the individual doctor rather than the managed care organization remains legally responsible for the treatment decision.

The challenge of genetics.

During the 1980’s and 1990’s, knowledge about genes and how they work began to play an important role in medicine. Scientists identified particular genes that increase risk of many diseases, including breast cancer, colon cancer, and certain brain disorders. In the early 2000’s, an international scientific effort called the Human Genome Project and a private company called Celera Genomics Corporation essentially completed the work of determining the sequence, or order, of the chemical units called bases that make up the human genome. A genome is the complete set of genetic material in a cell. Scientists hope to use this sequence information to determine how genes in the human body function in health and disease.

The age of genetic medicine holds great promise for new ways of preventing and treating illness. But genetic medicine also creates many challenges for doctors, patients, and societies. Identifying an abnormal gene alerts doctors and patients to watch closely for signs of the disease associated with that gene. But in most cases, testing offers little other medical benefit because few treatments yet exist for damaged genes. As a result, doctors are uncertain about when genetic tests should be used and who should be tested.

Many patients question whether the benefits of knowing their genetic flaws are sufficient to outweigh the anxiety this knowledge will bring. Patients also worry about their ability to keep information about their genetic abnormalities private. Policymakers share these fears. They worry that insurers may not want to provide insurance for people with genetic abnormalities. Another concern is that employers may try to avoid hiring these people. Societies must try to find ways to protect people from misuse of genetic information. At the same time, they want to encourage continued progress in this important new area of human knowledge.